[Sam Battle], known on YouTube as [Look Mum No Computer], is best known as a musical artist, but lately he seems to have taken a bit of a shine to retro telecoms gear. His latest foray is into the realm of the minicom, a TTY device that was a lifeline for people who could not hear well enough to hold a conversation over the telephone. Now that most text chat happens over the internet, it is becoming much harder to find another minicom user to talk to, so [Sam] decided to take the human out of the loop entirely and have the minicom user talk to a Raspberry Pi running an instance of MegaHal, a 1990s-era chatterbot. The idea of this build (which became an exhibit at This Museum Is (Not) Obsolete) was to have a number of minicom terminals around the room, connected via the internal telephone network (and the retro telephone exchange [Sam] maintains) to a line interface module based on the Mitel MH88422 chip. This handy device allows a Raspberry Pi to interface to the telephone line and answer calls, with all the usual handshaking taken care of. The audio signal from the Mitel interface is fed to the Pi via a USB audio interface module, since the Pi has no audio input of its own.

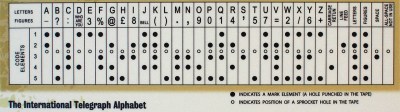

The minicom uses Baudot code to turn the typed characters into an audio stream that the other end can decode, so the Pi needed to handle that too. Since the code itself dates from the 1870s, this was likely not a huge deal to implement. MegaHal generates its replies from Markov models, which can be considered an example of an AI system, depending on your viewpoint. After being trained on an appropriate data set, MegaHal’s model can (sometimes!) generate intelligible sentences in response to some input text. [Sam]’s collaborator [MarCNeT] used lyrics from [Sam]’s songs for the training. With the minicom-to-MegaHal interfacing done, that still wasn’t enough for [Sam], so he added an interface to his slightly terrifying Kosmo creation, bringing some teeth and eye movement into the mix. A SparkFun audio trigger gives Kosmo its voice, although we reckon the Pi could probably have managed that as well. If you want to follow along with the design process, you can read the discourse transcript, and if that’s not enough and you’re close enough, you could pop over to This Museum Is (Not) Obsolete and check it out in person.
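The write-up doesn’t describe how the Pi produces the Baudot side of the conversation, so what follows is only an illustrative sketch of the transmit direction, not the exhibit’s code. It assumes US-style TTY parameters: 5-bit ITA2 letter codes sent at 45.45 baud as FSK tones, 1400 Hz (mark) for a 1 bit and 1800 Hz (space) for a 0 bit, framed with one start bit and 1.5 stop bits. Some non-US textphones run at 50 baud instead, so treat every one of these numbers as an assumption to check against the real device. The function names are hypothetical, and only the letters shift (plus space, CR and LF) is mapped.

```python
import math

# ITA2 "letters" shift: character -> 5-bit code (bit 1 is the least
# significant bit). Figures (digits and punctuation) are deliberately
# left out of this sketch.
ITA2_LETTERS = {
    "E": 1, "\n": 2, "A": 3, " ": 4, "S": 5, "I": 6, "U": 7, "\r": 8,
    "D": 9, "R": 10, "J": 11, "N": 12, "F": 13, "C": 14, "K": 15,
    "T": 16, "Z": 17, "L": 18, "W": 19, "H": 20, "Y": 21, "P": 22,
    "Q": 23, "O": 24, "B": 25, "G": 26, "M": 28, "X": 29, "V": 30,
}
LTRS = 31  # control code: switch the receiver to the letters shift

# Assumed US TTY tone pair; verify against the real device.
MARK_HZ = 1400.0   # tone for a 1 bit
SPACE_HZ = 1800.0  # tone for a 0 bit


def encode_letters(text):
    """Map text to 5-bit codes, starting with a LTRS shift."""
    codes = [LTRS]
    for ch in text.upper():
        if ch not in ITA2_LETTERS:
            raise ValueError(f"unsupported character: {ch!r}")
        codes.append(ITA2_LETTERS[ch])
    return codes


def frame_bits(code, stop_bits=1.5):
    """Async frame as (bit, length in bit periods): start, 5 data bits LSB first, stop."""
    bits = [(0, 1.0)]
    bits += [((code >> i) & 1, 1.0) for i in range(5)]
    bits.append((1, stop_bits))
    return bits


def fsk_samples(codes, baud=45.45, sample_rate=8000, stop_bits=1.5):
    """Phase-continuous FSK audio (floats in -1..1) for a list of codes."""
    samples = []
    phase = 0.0
    elapsed = 0.0  # time so far, measured in bit periods
    for code in codes:
        for bit, length in frame_bits(code, stop_bits):
            # Round bit boundaries (not bit lengths) so timing error never accumulates.
            start = round(elapsed * sample_rate / baud)
            elapsed += length
            end = round(elapsed * sample_rate / baud)
            step = 2 * math.pi * (MARK_HZ if bit else SPACE_HZ) / sample_rate
            for _ in range(end - start):
                samples.append(math.sin(phase))
                phase = (phase + step) % (2 * math.pi)
    return samples


if __name__ == "__main__":
    codes = encode_letters("HI")
    print(codes)  # [31, 20, 6]
    print(len(fsk_samples(codes)), "samples at 8 kHz")
```

Keeping the phase continuous between bits avoids clicks at tone changes. The samples would still need scaling to 16-bit integers (for example via Python’s standard `wave` module) before being played out through an audio interface, and decoding the minicom’s replies would need the reverse step, a per-bit tone detector, which is not shown here.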
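The article doesn’t go into how the chatterbot builds its replies. To give a feel for the idea behind a Markov-model chatterbot, here is a deliberately simplified word-level Markov chain in Python: it records which word follows each pair of words in the training lines, then walks those transitions at random to assemble a sentence. MegaHal itself is more elaborate (it is usually described as combining forward and backward fourth-order models and seeding replies from keywords in the user’s input), so this sketch, including its class and method names, is an illustrative assumption rather than MegaHal’s algorithm.

```python
import random
from collections import defaultdict


class MarkovChain:
    """Word-level, forward-only Markov chain of a fixed order (simplified)."""

    def __init__(self, order=2):
        self.order = order
        # Maps a tuple of `order` words to every word seen right after it.
        self.transitions = defaultdict(list)

    def train(self, line):
        # None padding marks the start and end of each training line.
        words = [None] * self.order + line.split() + [None]
        for i in range(len(words) - self.order):
            context = tuple(words[i:i + self.order])
            self.transitions[context].append(words[i + self.order])

    def generate(self, rng=random, max_words=30):
        context = (None,) * self.order
        out = []
        while len(out) < max_words:
            followers = self.transitions.get(context)
            if not followers:
                break
            word = rng.choice(followers)  # picks in proportion to how often seen
            if word is None:  # reached an end-of-line marker
                break
            out.append(word)
            context = context[1:] + (word,)
        return " ".join(out)


if __name__ == "__main__":
    chain = MarkovChain(order=2)
    # Hypothetical placeholder text; the exhibit reportedly used song lyrics.
    for line in ["the museum is not obsolete", "the machine is talking back"]:
        chain.train(line)
    print(chain.generate())
```

With only a couple of short training lines the chain can do little more than replay them; the occasionally surprising recombinations only appear once the training set is large enough for different lines to share word contexts, which is why a body of lyrics makes a reasonable corpus.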

AI-related shenanigans are not rare in these parts. Here’s a fun clickbait generator, then there’s a way to get Linux to do what you mean rather than what you say, and finally, if this is all too far-fetched and not practical enough for you, you could hack your coffee maker so it learns to steam up just when you most need it.