There’s a dreaded disease that’s plagued Internet Service Providers for years. OK, there are probably several diseases, but today we’re talking about bufferbloat: what it is, how to test for it, and finally what you can do about it. Oh, and a huge shout-out to all the folks working on this problem. Many programmers and engineers, like Vint Cerf, Dave Taht, Jim Gettys, and many more have cracked this nut for our collective benefit.

When your computer sends a TCP/IP packet to another host on the Internet, that packet routes through your computer, through the network card, through a switch, through your router, through an ISP modem, through a couple ISP routers, and then finally through some very large routers on its way to the datacenter. Or maybe through that convoluted chain of devices in reverse, to arrive at another desktop. It’s amazing that the whole thing works at all, really. Each of those hops represents another place for things to go wrong. And if something really goes wrong, you know it right away. Pages suddenly won’t load. Your VoIP calls get cut off, or have drop-outs. It’s pretty easy to spot a broken connection, even if finding and fixing it isn’t so trivial.

That’s an obvious problem. What if you have a non-obvious problem? Sites load, but just a little slower than it seems like they used to. You know how to use a command line, so you try a ping test. Huh, 15.0 ms off to Google.com. Let it run for a hundred packets, and essentially no packet loss. But something’s just not right. When someone else is streaming a movie, or a machine is pushing a backup up to a remote server, it all falls apart. That’s bufferbloat, and it’s actually really easy to do a simple test to detect it. Run a speed test, and run a ping test while your connection is being saturated. If your latency under load goes through the roof, you likely have bufferbloat. There are even a few of the big speed test sites that now offer bufferbloat tests. But first, some history.

History of Collapse

The Internet in the 1980s was a very different place. On January 1st, 1983, the ARPANET adopted TCP/IP, the birthday of the Internet. Later that year, the Domain Name System was introduced to replace hosts.txt as the way hostname to IP resolution was done. By 1984, there was a problem brewing, and in 1986 the Internet suffered a heart attack in the form of congestion collapse.

In those days, cutting edge local networks were running at 10 megabits per second, but the site-to-site links were only transferring 56 kilobits per second at best. In late 1986, links suddenly saw extreme slowdowns, like the 400 yard link between Lawrence Berkeley Laboratory and the University of California at Berkeley. Instead of 32 kbps, this link was suddenly transferring at an effective 40 bits per second. The problem was congestion. It’s a very similar model to what happens when too many cars are on the same stretch of highway: traffic slows to a crawl.

The 4.3 release of BSD had a TCP implementation that did a couple of interesting things. First, it would start sending packets at full wire speed right away. And second, if a packet was dropped along the way, it would resend it as soon as possible. On a Local Area Network, where there’s a uniform network speed, this works out just fine. On the early Internet, and on this Berkeley link in particular, the 10 Mb/s LAN connection was funneled down to 32 kbps or 56 kbps.

To deal with this mismatch, the gateways on either side of the link had a small buffer, roughly 30 packets worth. In a congestion scenario, more than 30 packets backed up at the gateway, and the extra packets were just dropped. When packets were dropped, or congestion pushed the round trip time beyond the timeout threshold, the sender immediately re-sent them, generating more traffic. Several hosts trying to send too much data over the too-narrow connection resulted in a congestion collapse, a feedback loop of traffic. The early Internet unintentionally DDoS’d itself.

The solution was a series of algorithms added to BSD’s TCP implementation, which have now been adopted as part of the standard. Put simply, in order to send as quickly as possible, traffic needed to be intelligently slowed down. The first technique introduced was slow start. You can see this still being used when you run a speed test, and the connection starts at a very slow speed, and then ramps up quickly. Specifically, only one packet is sent at the start of transmission. For each received packet, an acknowledgement packet (an ack) is returned. Upon receiving an ack, two more packets are sent down the wire. This results in a quick ramping up to twice the maximum rate of the slowest link in the connection chain. The number of packets “out” at a time is called the congestion window size. So another way to look at the issue is that each acknowledged packet increases the congestion window by one, which doubles the window every round trip.
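To make that ramp concrete, here is a minimal sketch of slow start in Python. It is an idealized model, not any real TCP stack: it assumes every packet in flight is acknowledged within one round trip and nothing is dropped, and the function name and parameters are made up for illustration.

```python
def slow_start_windows(round_trips, initial_window=1):
    """Return the congestion window (in packets) at the start of each round trip.

    Idealized assumption: every packet in flight is acked within the round
    trip, and each ack grows the window by one packet.
    """
    window = initial_window
    history = []
    for _ in range(round_trips):
        history.append(window)
        acks_received = window      # one ack per packet that was in flight
        window += acks_received     # +1 per ack, so the window doubles
    return history


print(slow_start_windows(6))  # [1, 2, 4, 8, 16, 32]
```

Six round trips are enough to go from one packet to 32 in flight, which is why a speed test climbs so quickly after its slow first moment.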

Once slow-start has done its job, and the first packet is dropped or times out, the TCP flow transitions to using a congestion avoidance algorithm. This one has an emphasis on maintaining a stable data rate. If a packet is dropped, the window is cut in half, and every time a full window’s worth of packets is received, the window increases by one. The result is a sawtooth graph that is constantly bouncing around the maximum throughput of the entire data path. This is a bit of an over-simplification, and the algorithms have been developed further over time, but the point is that rolling out this extension to TCP/IP saved the Internet. In some cases updates were sent on tape, through the mail, something of a hard reboot of the whole network.
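The sawtooth is easy to reproduce with a toy model. The sketch below assumes a path that drops a packet whenever the window exceeds a fixed `capacity` (a hypothetical stand-in for the bottleneck), halves the window on a drop, and otherwise adds one packet per round trip. Real congestion control is considerably more involved.

```python
def congestion_avoidance(round_trips, capacity, window=1):
    """Return the window (in packets) used on each simulated round trip.

    Assumption for illustration: a drop happens whenever the window is
    larger than `capacity`, the number of packets the path can carry.
    """
    history = []
    for _ in range(round_trips):
        history.append(window)
        if window > capacity:
            window = max(1, window // 2)   # drop seen: cut the window in half
        else:
            window += 1                    # full window acked: grow by one
    return history


print(congestion_avoidance(12, capacity=8, window=6))
# [6, 7, 8, 9, 4, 5, 6, 7, 8, 9, 4, 5]
```

The window creeps up past what the path can carry, gets halved, and starts climbing again, which is the sawtooth described above.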

Fast-Forward to 2009

The Internet has evolved a bit since 1986. One of the things that’s changed is that the price of hardware has come down, and capabilities have gone up dramatically. A gateway from 1986 would measure its buffer in kilobytes, and less than 100 at that. Today, it’s pretty trivial to throw megabytes and gigabytes of memory at problems, and router buffers are no exception. What happens when algorithms written for 50 kB buffer sizes are met with 50 MB buffers in modern devices? Predictably, things go wrong.

When a large First In First Out (FIFO) buffer is sitting on the bottleneck, that buffer has to fill completely before packets are dropped. A TCP flow is intended to slow-start up to 2x available bandwidth, very quickly start dropping packets, and slash its bandwidth use in half. Bufferbloat is what happens when that flow spends too much time trying to send at twice the available speed, waiting for the buffer to fill. And once the connection jumps into its stable congestion avoidance mode, that algorithm depends on either dropped packets or timeouts, where the timeout threshold is derived from the observed round-trip time. The result is that for any connection, the round-trip latency increases with the number of buffered packets on the path. And for a connection under load, the TCP congestion avoidance techniques are designed to fill those buffers before reducing the congestion window.

So how bad can it be? On a local network, your round trip time is measured in microseconds. Your time to an Internet host should be measured in milliseconds. Bufferbloat pushes that to seconds, and tens of seconds in some of the worst cases. Where that really causes problems is when it causes traffic to time out at the application layer. Bufferbloat delays all traffic, so it can cause DNS timeouts, turn VoIP calls into a garbled mess, and make the Internet a painful experience.
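A back-of-the-envelope calculation shows where those seconds come from. A packet arriving at a full FIFO buffer has to wait for everything ahead of it to drain through the bottleneck link. The sketch below assumes the buffer is completely full and the link drains at a constant rate; the buffer sizes and link speeds are illustrative, not measurements of any particular device.

```python
def drain_time_seconds(buffer_bytes, link_bits_per_second):
    """Worst-case queuing delay: time to drain a full buffer through the link."""
    return buffer_bytes * 8 / link_bits_per_second


# A hypothetical 256 kB buffer in front of a 1 Mbit/s uplink
print(drain_time_seconds(256_000, 1_000_000))      # 2.048

# A hypothetical 50 MB buffer in front of a 10 Mbit/s link
print(drain_time_seconds(50_000_000, 10_000_000))  # 40.0
```

The same arithmetic explains why nobody noticed in 1986: a 30 packet buffer simply cannot hold enough data to add that kind of delay.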

The solution is Smart Queue Management. There’s a lot of work that’s been done on this concept since 1986. Fair queuing was one of the first solutions, making intermediary buffers smart, and splitting individual traffic flows into individual queues. When the link was congested, each queue would release a single packet at a time, so downloading an ISO over BitTorrent wouldn’t entirely crowd out your VoIP traffic. After many iterations, the CAKE algorithm has been developed and widely deployed. All of these solutions essentially trade off a little bit of maximum throughput in order to ensure significantly reduced latency.
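The per-flow queue idea can be sketched in a few lines. This is plain round-robin fair queuing over flows identified by a label, written only to illustrate the concept. It is not how CAKE is implemented, and the flow names and the `fair_dequeue` helper are invented for the example.

```python
from collections import deque


def fair_dequeue(packets):
    """Take (flow_id, payload) pairs in arrival order and return the send order.

    Each flow gets its own queue, and the scheduler releases one packet
    per flow per pass instead of draining a single shared FIFO.
    """
    queues = {}
    for flow, payload in packets:
        queues.setdefault(flow, deque()).append(payload)

    order = []
    while queues:
        for flow in list(queues):          # one pass over the active flows
            order.append((flow, queues[flow].popleft()))
            if not queues[flow]:
                del queues[flow]           # flow has nothing left to send
    return order


arrivals = [("torrent", 1), ("torrent", 2), ("torrent", 3),
            ("voip", 1), ("torrent", 4)]
print(fair_dequeue(arrivals))
# [('torrent', 1), ('voip', 1), ('torrent', 2), ('torrent', 3), ('torrent', 4)]
```

In a single FIFO the VoIP packet would leave fourth, behind three torrent packets. With per-flow queues it leaves second, no matter how deep the torrent queue gets.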

Are You FLENT in Bufferbloat?

I would love to tell you that bufferbloat is a solved problem, and that you surely don’t have a problem with it on your network. That, unfortunately, isn’t quite the case. For a rough handle on whether you have a problem, use the speed tests at dslreports, fast.com, or speedtest.net. Each of these three, and probably others, gives some sort of latency under load measurement. There’s also a bufferbloat-specific test hosted by Waveform, which seems to be the best one to run in the browser. An ideal network will still show low latency when there is congestion. If your latency spikes significantly higher during the test, you probably have a case of bufferbloat.
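If you would rather do the comparison by hand, collect round-trip times from one ping run on an idle connection and one taken while a speed test is saturating the link, then compare the two. The helper below is only a sketch of that arithmetic. The sample values are made up, and what counts as "too much" is a judgment call rather than a standard.

```python
from statistics import median


def latency_increase_ms(idle_rtts_ms, loaded_rtts_ms):
    """Difference between the median loaded RTT and the median idle RTT."""
    return median(loaded_rtts_ms) - median(idle_rtts_ms)


# Made-up samples, in milliseconds, purely for illustration
idle = [15.0, 14.8, 15.3, 15.1]
loaded = [120.4, 310.9, 95.2, 240.0]

increase = latency_increase_ms(idle, loaded)
print(f"Latency under load rose by about {increase:.0f} ms")
```

The median is used instead of the mean so that a single stray ping does not skew the result.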

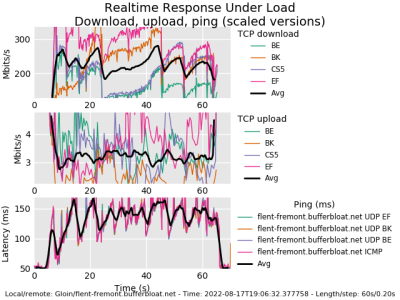

For the nerdier of us, there is a command line tool, flent, that does an in-depth bufferbloat test. I used the command flent rrul -p all_scaled -H flent-fremont.bufferbloat.net to generate this chart, and you can see the latency quickly scaling to over 100 ms under load. This is running the Realtime Response Under Load test, and it clearly indicates I have a bit of a bufferbloat problem on my network. Problem identified, what can I do about it?

You Can Have Your Cake

Since we’re all running OpenWRT routers on our networks… You are running an open source router, right? Alternatively there are a handful of commercial routers that have some sort of SQM built-in, but we’re definitely not satisfied with that here on Hackaday. The FOSS solution here is CAKE, a queue management system, and it’s already available in the OpenWRT repository. The package you’re looking for is luci-app-sqm. Installing that gives you a new page on the web interface — under Network -> SQM QoS.

On that page, pick your WAN interface as Interface name. Next, convert your speed test results into kilobits per second, shave off about 5%, and punch those into the upload and download speeds. Flip over to the Queue Discipline tab, where we ideally want to use Cake and piece_of_cake.qos as the options. The last tab, Link Layer Adaptation, requires a bit of homework to determine the best value, but Ethernet with overhead and 22 seem to be sane values to start with. Enable the SQM instance, and then save and apply.
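The conversion is simple enough to do in your head, but here it is as a small Python sketch. It assumes your speed test reports megabits per second and uses the 5% margin suggested above; the function name and the example speeds are hypothetical.

```python
def sqm_rate_kbit(measured_mbit, margin=0.05):
    """Convert a speed test result in Mbit/s to a kbit/s value with headroom."""
    return round(measured_mbit * 1000 * (1 - margin))


# Example: a hypothetical connection measuring 100 Mbit/s down, 20 Mbit/s up
print(sqm_rate_kbit(100))  # 95000
print(sqm_rate_kbit(20))   # 19000
```

Raising `margin` is the same as the "tune that direction’s speed a bit lower" step, so it is handy to keep as a parameter while experimenting.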

And now we tune and test. On first install, the router may actually need a reboot to get the kernel module loaded. But you should see an immediate difference on one of the bufferbloat tests. If your upload or download bufferbloat is still excessive, tune that direction’s speed a bit lower by a couple percent. If your bufferbloat drops to 0, try increasing the speed slightly. You’re looking for a minimal effect on maximum speed, and a maximum effect on bufferbloat. And that’s it! You’ve slain the Bufferbloat Beast!

“Thompson Router” by Simeon W is licensed under CC BY 2.0 .
