This month the media was abuzz with the announcement that the US National Ignition Facility (NIF) had accomplished a significant breakthrough in the quest for commercial nuclear fusion. Specifically, it was announced that a net fusion energy gain (Q) of about 1.5 had been measured: for 2.05 MJ of laser energy delivered, 3.15 MJ of fusion energy was produced.

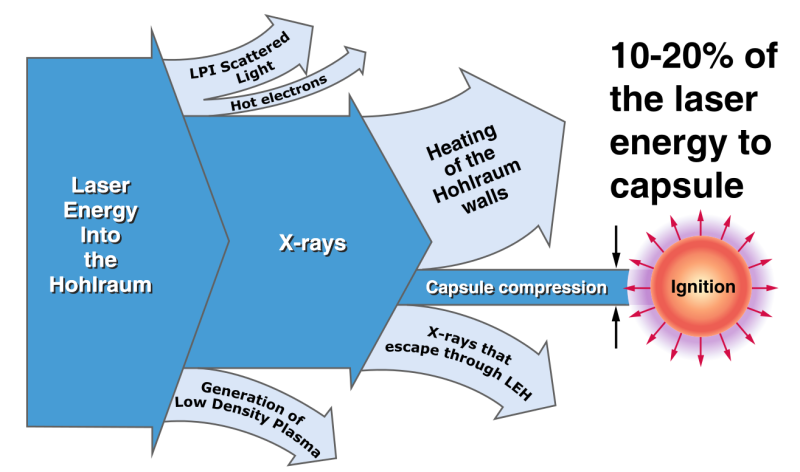

What was remarkable about this event, compared to last year's yield of 1.3 MJ, is that it demonstrates an optimized firing routine for the NIF's lasers, and shows that changing how the Hohlraum containing the deuterium-tritium (D-T) fuel is targeted results in more effective compression. Within this Hohlraum, X-rays are produced that serve to compress the fuel. With enough pressure and temperature, the Coulomb barrier that generally keeps nuclei from getting near each other can be overcome, and that's fusion.
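To put a rough number on that Coulomb barrier, here is a back-of-the-envelope sketch. It assumes (none of this is from the article) that the nuclei are touching spheres with radius R0 · A^(1/3), R0 = 1.2 fm, and picks a plasma temperature of 100 million kelvin purely for illustration.

```python
# Back-of-the-envelope estimate of the D-T Coulomb barrier versus thermal energy.
# Assumptions (illustrative, not from the article): nuclear radius
# r = R0 * A^(1/3) with R0 = 1.2 fm, nuclei treated as touching spheres,
# and a plasma temperature of 100 million kelvin.

E2_MEV_FM = 1.44          # e^2 / (4*pi*eps0) expressed in MeV*fm
R0_FM = 1.2               # empirical nuclear radius constant (assumed)
K_B_EV_PER_K = 8.617e-5   # Boltzmann constant in eV/K


def nuclear_radius_fm(mass_number: int) -> float:
    """Approximate nuclear radius in femtometres."""
    return R0_FM * mass_number ** (1 / 3)


def coulomb_barrier_kev(z1: int, a1: int, z2: int, a2: int) -> float:
    """Electrostatic potential energy (keV) when the two nuclei just touch."""
    separation_fm = nuclear_radius_fm(a1) + nuclear_radius_fm(a2)
    return E2_MEV_FM * z1 * z2 / separation_fm * 1000.0


def thermal_energy_kev(temperature_k: float) -> float:
    """Characteristic thermal energy k_B * T in keV."""
    return K_B_EV_PER_K * temperature_k / 1000.0


barrier = coulomb_barrier_kev(1, 2, 1, 3)   # deuterium (Z=1, A=2) + tritium (Z=1, A=3)
thermal = thermal_energy_kev(1e8)           # assumed 100 million K plasma
print(f"Coulomb barrier ~{barrier:.0f} keV, k_B*T ~{thermal:.1f} keV, "
      f"ratio ~{barrier / thermal:.0f}x")
```

Under these assumptions the barrier comes out at a few hundred keV, roughly fifty times the typical thermal energy. In practice fusion therefore relies on the fastest particles in the plasma and on quantum tunnelling, which is why squeezing the fuel to high density and temperature matters so much: it makes those rare events frequent enough.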

Based on the preliminary results, it would appear that a few percent of the D-T fuel did undergo fusion. Which raises the next question: does this really mean that we're any closer to having commercial fusion reactors churning out plentiful power?

Science Communication

As the eternal jibe goes, nuclear fusion has always been a decade away, ever since its discovery nearly a hundred years ago. What is sadly missing from much of the communication about fundamental physics research and development is a deeper understanding of what is happening, and of what any reported findings actually mean. Since we're dealing with fundamental physics and boldly heading into new areas of plasma physics, high-temperature superconducting magnets, as well as exciting new fields in materials research, all we can do is provide a solid educated guess.

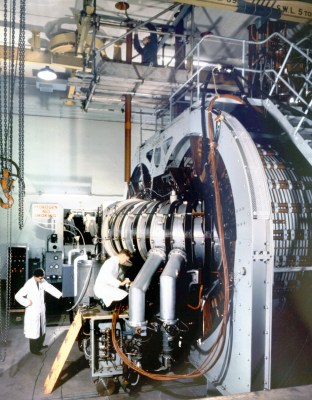

With the Z-pinch fusion reactors of the 1950s, it seemed that commercial fusion reactors were just a few years away. Simply pulse high currents through the plasma to induce fusion, harvest the energy, and suddenly the much-touted nuclear fission reactors of the time seemed like relics of yesteryear. With high Q numbers initially reported for Z-pinch fusion reactors, newspapers ran headlines proclaiming with absolute certainty that the UK would be building the first fusion reactors, with the rest of the world to follow.

Later it was discovered that the measurements had been off: the fusion gain had not been nearly as astounding as reported, and nobody had been aware of how serious the plasma instabilities in this type of reactor were, which complicated their use. It wasn't until the Soviet tokamak design, which added a strong magnetic field around the plasma, that these plasma dynamics seemed like they could be dealt with.

Although an alternative solution existed at the time in the form of stellarators, these require a rather complex geometry that follows the plasma field instead of constricting it. This meant that they didn't become attractive until the 1990s, when computer simulations became powerful enough to model the requisite shape of such a reactor. Currently, the Wendelstein 7-X (W7-X) stellarator is the largest and most interesting implementation of such a reactor, and it has recently been fully configured with cooled divertors that should allow it to run continuously.

All of which is to say that since the 1950s a lot has happened, many theories were tried, some things stuck, while others flopped. It’s on this wobbly edge between the fields of practical and theoretical physics, as well as materials sciences and various engineering disciplines that humanity is moving ever closer to making a practical commercial fusion reactor work.

Inertial Confinement Fusion Is A Laggard

The NIF at Lawrence Livermore National Laboratory (LLNL) uses laser-based inertial confinement fusion (ICF), which essentially means that the D-T fuel is held in place by its own inertia while it gets blasted in order to achieve fusion. At its core this isn't significantly more complicated than other fusion reactor concepts, all of which tend to use D-T fuel in the following reaction:

$$ {}^{2}_{1}\mathrm{D} + {}^{3}_{1}\mathrm{T} \rightarrow {}^{4}_{2}\mathrm{He}\ (3.5\ \mathrm{MeV}) + n\ (14.1\ \mathrm{MeV}) $$

As the two hydrogen nuclei fuse, a significant amount of energy is released, which can be captured to create steam and drive a generator. Meanwhile, the helium waste has to be removed, the high-velocity (fast) neutrons captured, and the D-T fuel replenished. When comparing this to magnetic confinement fusion (MCF) technologies such as tokamaks and stellarators, it becomes clear why ICF isn't even in the same league.
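Why the neutrons need capturing becomes clearer when looking at how the roughly 17.6 MeV released per D-T reaction is shared out. The sketch below assumes the reactants are essentially at rest, treats the motion non-relativistically and rounds the masses to their mass numbers; it is an approximation, not a precise kinematics calculation.

```python
# How the ~17.6 MeV released by one D-T fusion reaction is shared between the
# helium-4 nucleus and the neutron. Simplifying assumptions: reactants at rest,
# non-relativistic motion, masses rounded to mass numbers (4 and 1).

Q_DT_MEV = 17.6   # energy released per D-T reaction
M_HE = 4.0        # helium-4 mass in atomic mass units (rounded)
M_N = 1.0         # neutron mass in atomic mass units (rounded)

# Momentum conservation: both products fly apart with equal and opposite
# momentum p. Kinetic energy is p^2 / (2m), so each product's share of the
# energy is inversely proportional to its mass.
e_neutron = Q_DT_MEV * M_HE / (M_HE + M_N)
e_helium = Q_DT_MEV * M_N / (M_HE + M_N)

print(f"neutron:  {e_neutron:.2f} MeV ({e_neutron / Q_DT_MEV:.0%})")
print(f"helium-4: {e_helium:.2f} MeV ({e_helium / Q_DT_MEV:.0%})")
```

About four fifths of the energy ends up with the neutron. Being uncharged, it cannot be held by magnetic fields, so it has to be stopped in material surrounding the plasma, where its energy turns into the heat that would drive the generator.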

Both tokamaks and stellarators are essentially designed as continuous-use reactors, with a constantly maintained plasma flow in which deuterium and tritium nuclei fuse and contaminants are removed via the cooled divertors. Neutrons are captured by a lithium blanket lining the inside of the reactor vessel, which breeds tritium, allowing this short-lived hydrogen isotope to be constantly replenished along with deuterium.
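For reference, the main tritium-breeding reaction in such a blanket, assuming it relies on lithium-6 (the specific blanket composition isn't covered here), is:

$$ {}^{6}_{3}\mathrm{Li} + n \rightarrow {}^{4}_{2}\mathrm{He} + {}^{3}_{1}\mathrm{T} + 4.8\ \mathrm{MeV} $$

With a half-life of about 12.3 years, tritium barely occurs in nature, so breeding it from the reactor's own neutrons is how such a reactor would keep itself supplied.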

Ultimately, a tokamak or stellarator would be self-heating, with a Q of over 15. This means that the reactor can supply the energy needed to heat its plasma, while still producing enough energy to run a generator or similar. This would make such a reactor essentially self-sustaining, none of which applies to an ICF system like the NIF. It requires the production of special D-T fuel pellets, and the insertion of each pellet into the ignition chamber, which makes continuous operation rather cumbersome.
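A simplified power balance hints at why a high Q translates into self-heating. This is a minimal sketch under stated assumptions, not how any particular reactor design is analyzed: Q is taken as fusion power divided by external heating power, only the charged helium-4 nuclei (alpha particles, about 3.5 of the 17.6 MeV per reaction) are assumed to stay in the plasma and heat it, and the neutrons are assumed to escape to the blanket.

```python
# Fraction of plasma heating supplied by the fusion reactions themselves.
# Assumptions (simplified steady-state power balance):
# - Q = P_fusion / P_external_heating
# - only alpha particles (helium-4 nuclei) heat the plasma; they carry about
#   3.5 of the 17.6 MeV released per D-T reaction
# - neutrons escape to the blanket and do not heat the plasma

ALPHA_SHARE = 3.5 / 17.6  # fraction of fusion energy carried by alpha particles


def self_heating_fraction(q: float) -> float:
    """Share of total plasma heating that comes from alpha particles at gain Q."""
    alpha_power = ALPHA_SHARE * q   # expressed in units of the external heating power
    return alpha_power / (alpha_power + 1.0)


for q in (1, 5, 10, 15, 30):
    print(f"Q = {q:>2}: {self_heating_fraction(q):.0%} of heating from fusion")
```

Under these assumptions, at Q = 15 roughly three quarters of the plasma heating already comes from the fusion reactions, and that share keeps creeping towards 100% as Q rises.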

In terms of net energy production, the NIF also doesn't look very good. Whereas the UK's JET tokamak, for example, has reached a Q of about 0.65 (below break-even), the NIF's 3.15 MJ yield is paltry indeed once the approximately 422 MJ of input energy needed for a single shot is taken into account.
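The difference between the headline gain and the facility-level picture is simple arithmetic on the figures quoted above (2.05 MJ of laser energy, 3.15 MJ of fusion yield, roughly 422 MJ of input energy per shot); the helper name `gain` is just for illustration.

```python
# Compare the NIF shot's gain relative to the laser energy on target with its
# gain relative to the total input energy for the shot, using the figures
# quoted in the article.

LASER_ENERGY_MJ = 2.05     # laser energy delivered to the target
FUSION_YIELD_MJ = 3.15     # fusion energy produced
FACILITY_INPUT_MJ = 422.0  # approximate input energy for the whole shot


def gain(output_mj: float, input_mj: float) -> float:
    """Simple energy gain: output divided by input."""
    return output_mj / input_mj


target_q = gain(FUSION_YIELD_MJ, LASER_ENERGY_MJ)
facility_q = gain(FUSION_YIELD_MJ, FACILITY_INPUT_MJ)

print(f"Target gain:   {target_q:.2f}")
print(f"Facility gain: {facility_q:.4f} ({facility_q:.2%})")
print(f"Input per MJ of yield: {FACILITY_INPUT_MJ / FUSION_YIELD_MJ:.0f} MJ")
```

This gives a target gain of about 1.54, but a facility gain of only about 0.0075: on the order of 134 MJ of input energy for every MJ of fusion energy produced.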

Putting A Price Tag On Research

During the Cold War era, the R&D budget for nuclear fusion research was rather significant. This was at least partially driven by the lingering fear that the Other Side might manage to tame this incredible new source of power first, and helped by the fascinating insights fusion research offered into how thermonuclear weapons might be tweaked and maintained more optimally.

When the Cold War ended and the 1990s rolled around, nuclear fusion research found its R&D budget hollowed out to the point where most of it ground to a screeching halt. These days nuclear fusion research is doing significantly better, with many nations running MCF research programs. The majority of these are tokamaks, followed by stellarators, with the Ukrainian Uragan-2M and the German Wendelstein 7-X being prominent examples. The rest are ICF devices, which are notably used for fundamental research on fusion, not energy production.

In this context, if we look at the NIF's 3.15 MJ, it should be clear that we have not suddenly entered the age of commercial nuclear fusion reactors, nor are we on the verge of it. What it does mean, however, is that this particular ICF facility has achieved something of note, namely limited fusion ignition. How far this will help get us closer to commercial fusion reactors should become clear over the coming years.

What is beyond question is that putting a price tag on fundamental research makes little sense. The goal of such research is, after all, to increase our understanding of the world around us, and potentially to make life easier for everyone based on this improved understanding. Considering the wide range of responses to these recent NIF findings, it does raise the question of how well the fundamentals of nuclear fusion research are being communicated to the general public at all.
