In the days of CRT displays, the precise synchronization between source and display meant that the time between a video line appearing at the input and the dot writing it to the screen was constant, and very small. Today’s display technologies deliver unimaginable resolutions compared to the TV your family had in the 1970s, but all their signal processing imposes a much longer delay before a frame is displayed. This can become an issue for gamers, but also with normal viewing, because in some circumstances the delay can be long enough to be noticeable as a disconnect between picture and soundtrack. It’s something [Mike Kibbel] has addressed with his video input delay meter, and it makes for a very interesting project.

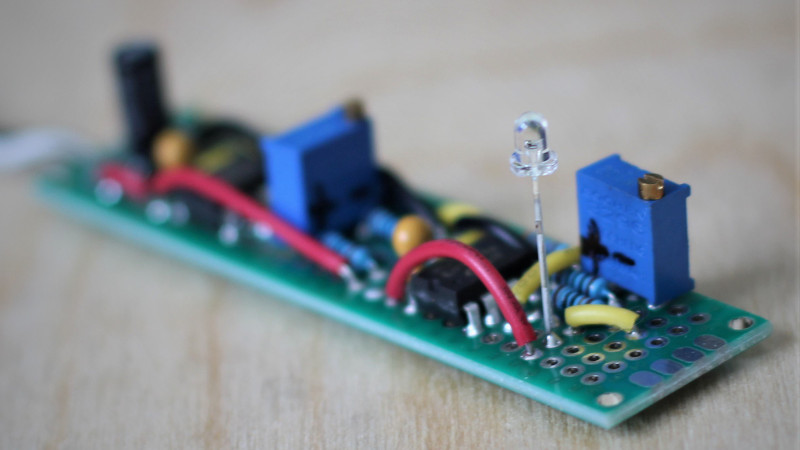

At its heart is an FPGA, and in the video below the break he goes into great detail about its programming. It both generates a DVI output to drive the monitor and performs the measurement. The analog-to-digital converter side of the circuit is interesting: a photodiode and an op-amp drive a comparator to form a simple 1-bit converter. He takes us through the design process in detail, with such useful little gems as the small amount of hysteresis applied to the comparator.
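To see why that hysteresis matters, here is a minimal software sketch of the idea. It is not [Mike Kibbel]'s FPGA or analog design; the function names, thresholds, and photodiode readings are all made up for illustration. A comparator with two thresholds only switches high above the upper one and low below the lower one, so a noisy rising edge produces a single clean transition that can be timed against the moment the test frame was sent.

```python
def schmitt_trigger(samples, low, high):
    """1-bit conversion with hysteresis: the output goes high only when the
    input exceeds `high`, and returns low only when it drops below `low`."""
    state = False
    out = []
    for v in samples:
        if not state and v > high:
            state = True
        elif state and v < low:
            state = False
        out.append(state)
    return out


def delay_in_samples(bits, trigger_index):
    """Samples between the frame being sent (trigger_index) and the first
    high comparator output at or after it; None if no edge is seen."""
    for i in range(trigger_index, len(bits)):
        if bits[i]:
            return i - trigger_index
    return None


# Hypothetical photodiode readings (arbitrary units): dark, noisy rise, bright.
readings = [0.10, 0.12, 0.11, 0.45, 0.55, 0.48, 0.80, 0.90, 0.52, 0.88]

# Assumed thresholds; a real circuit sets these with the feedback resistors.
bits = schmitt_trigger(readings, low=0.4, high=0.6)

# Assume the bright test frame was sent at sample index 2.
delay = delay_in_samples(bits, trigger_index=2)
print(bits, delay)
```

With a single threshold at 0.5, the readings 0.55 and 0.48 would toggle the output back and forth during the rise; with the two thresholds assumed here, the output switches once. Multiplying the sample count by the sample period would give the delay in time units.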

There are probably many ways this project could have been implemented, but this one is both technically elegant and extremely well documented. Definitely worth a look!
