One of the persistent challenges in audio technology has been distinguishing individual voices in a room full of chatter. In virtual meeting settings, the moderator can simply hit the mute button to focus on a single speaker. When there are multiple people making noise in the same room, though, there’s no easy way to isolate a desired voice from the rest. But what if we could ‘mute’ those other boisterous talkers with technology?

Enter the University of Washington’s research team, who have developed a groundbreaking method to address this very challenge. Their innovation? A smart speaker equipped with self-deploying microphones that can zone in on individual speech patterns and locations, thanks to some clever algorithms.

Robotic ‘Acoustic Swarms’

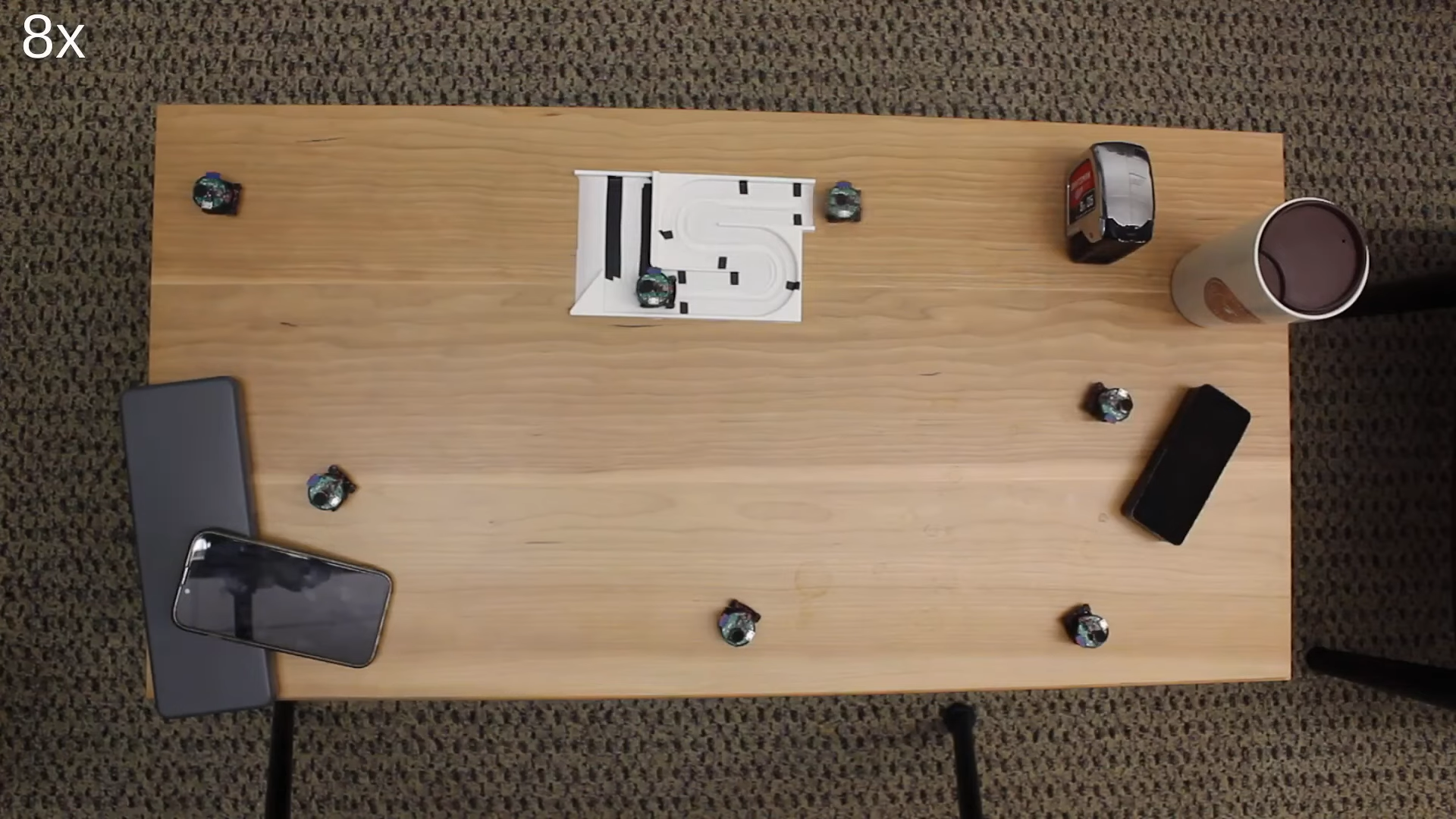

The system of microphones is reminiscent of a swarm of pint-sized Roombas that spring into action and deploy to specific zones in a room. Picture a board meeting where these roving mics replace the usual central microphone setup, giving finer control over the room’s audio. This robotic “acoustic swarm” can distinguish individual voices and pinpoint where they are in the room, and it does so purely from sound, with no need for cameras or other visual cues. The microphones, each roughly an inch in diameter, roll back to their charging station after use, which makes the system easy to move between environments.

The prototype comprises seven miniature robots that operate autonomously and in sync. Using high-frequency sound, much like bats, the robots navigate around tables, avoid drop-offs, and position themselves for the best audio accuracy. The goal is to keep a large distance between the individual units, because this spacing is what lets the system mute speakers and create specific audio zones effectively. Sound from a given speaker reaches each microphone at a slightly different time, and the farther apart the microphones are, the larger those differences become. That makes it easier to triangulate the person’s location and separate their voice from the rest. Regular smart speakers often have arrays of many microphones, but because those microphones are separated by a few inches at most, they generally can’t achieve the same feat.
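
To make the spacing argument concrete, here is a minimal Python sketch that computes time differences of arrival (TDOAs) from simple 2D geometry. It assumes free-field propagation at 343 m/s, and every position in it is a made-up illustrative value, not the team’s actual layout. It compares how much the delay pattern changes between two speakers half a metre apart when the microphones are 5 cm apart versus 1 m apart.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed free-field propagation)

def arrival_time(source, mic):
    """Seconds for sound to travel from a 2D source point to a 2D mic point (metres)."""
    return math.dist(source, mic) / SPEED_OF_SOUND

def tdoa(source, mic_a, mic_b):
    """Time difference of arrival: positive if the sound reaches mic_b after mic_a."""
    return arrival_time(source, mic_b) - arrival_time(source, mic_a)

# Two hypothetical speakers 0.5 m apart, 1.5 m in front of the microphones.
speaker_1 = (0.0, 1.5)
speaker_2 = (0.5, 1.5)

# Hypothetical layouts: a compact smart-speaker-style pair vs. a spread-out pair.
narrow = ((-0.025, 0.0), (0.025, 0.0))  # 5 cm apart
wide = ((-0.5, 0.0), (0.5, 0.0))        # 1 m apart

for name, (a, b) in (("narrow", narrow), ("wide", wide)):
    d1 = tdoa(speaker_1, a, b)
    d2 = tdoa(speaker_2, a, b)
    # The bigger this gap, the easier it is to tell the two speakers apart by timing.
    print(f"{name}: delay gap between speakers = {abs(d1 - d2) * 1e6:.1f} microseconds")
```

With these positions the wide layout produces a delay gap roughly nineteen times larger than the compact one. At an assumed 16 kHz sample rate, one sample lasts 62.5 microseconds, so the compact pair’s gap is smaller than a single sample while the wide pair’s spans many.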

“If I have one microphone a foot away from me, and another microphone two feet away, my voice will arrive at the microphone that’s a foot away first. If someone else is closer to the microphone that’s two feet away, their voice will arrive there first,” explained paper co-author Tuochao Chen. “We developed neural networks that use these time-delayed signals to separate what each person is saying and track their positions in a space. So you can have four people having two conversations and isolate any of the four voices and locate each of the voices in a room,” said Chen.
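
Chen’s team uses neural networks for this step. As a point of comparison only, the classical way to measure the delay between two microphones is cross-correlation: slide one signal against the other and pick the offset where they line up best. The sketch below is that textbook technique, not the researchers’ method; the function name, the 16 kHz sample rate, and the synthetic noise signal are all illustrative assumptions.

```python
import numpy as np

def estimate_delay(sig_a, sig_b, sample_rate):
    """Estimate how many seconds sig_b lags behind sig_a via plain cross-correlation.

    A positive result means the sound reached microphone B after microphone A.
    """
    corr = np.correlate(sig_b, sig_a, mode="full")
    # In "full" mode, output index len(sig_a) - 1 corresponds to zero lag.
    lag_samples = int(np.argmax(corr)) - (len(sig_a) - 1)
    return lag_samples / sample_rate

# Synthetic test: white noise stands in for a voice (illustrative only).
rng = np.random.default_rng(0)
fs = 16_000                          # assumed sample rate in Hz
source = rng.standard_normal(4_000)  # a quarter second of noise
delay_samples = 24                   # 1.5 ms, roughly 0.5 m of extra travel distance
mic_a = source
mic_b = np.concatenate([np.zeros(delay_samples), source[:-delay_samples]])

print(f"estimated delay: {estimate_delay(mic_a, mic_b, fs) * 1000:.2f} ms")
```

Plain cross-correlation works well for a single clean source. With several people talking at once and room reverberation, the correlation peaks blur together, which is one motivation for more robust techniques such as GCC-PHAT or learned models.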

Tested in kitchens, offices, and living rooms, the system distinguished voices located within 1.6 feet (about 50 cm) of each other 90% of the time, without any prior information about how many speakers were in the room. It currently takes roughly 1.82 seconds to process 3 seconds of audio. That delay is acceptable for livestreaming, but it is still too long for live calls at this stage.
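
The two processing figures can be turned into a real-time factor (processing time divided by audio duration). The sketch below also estimates the end-to-end delay under a loudly labeled assumption: that the system waits for a full 3-second chunk of audio before processing it. The article does not say how audio is actually buffered.

```python
def real_time_factor(processing_s, audio_s):
    """Processing time divided by audio duration; below 1.0 means it keeps up."""
    return processing_s / audio_s

def chunk_latency(processing_s, audio_s):
    """Delay if a whole chunk must be recorded before it is processed (an assumption)."""
    return audio_s + processing_s

# Figures from the article: 1.82 s of processing per 3 s of audio.
rtf = real_time_factor(1.82, 3.0)
latency = chunk_latency(1.82, 3.0)
print(f"real-time factor: {rtf:.2f}")
print(f"delay per chunk: {latency:.2f} s")
```

A real-time factor of about 0.61 means the system keeps up with incoming audio, but under the chunking assumption each word would reach the listener roughly 4.8 seconds after it was spoken: tolerable for a livestream, awkward for a back-and-forth call.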

The technology could find uses in a variety of settings. A smart home equipped with a well-spread microphone array could accept voice commands only from people in designated “active zones.” A voice-activated TV could be set up to respond only to instructions from people seated on the couch. It could even let a group join a virtual conference from a noisy cafe without everyone having to wear a microphone. It’s an edge case, sure, and you’d still need headphones, but someone’s likely to try it, right?
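
An “active zone” check could sit on top of whatever localization the array provides. The sketch below assumes the swarm already outputs an (x, y) position in metres for each speaker and models zones as axis-aligned rectangles; the `Zone` class, the `accept_command` function, and the couch coordinates are hypothetical, not part of any real smart-home API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Zone:
    """Hypothetical axis-aligned rectangular zone in room coordinates (metres)."""
    name: str
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max

def accept_command(speaker_xy, active_zones):
    """Return the name of the active zone the speaker is in, or None to ignore them."""
    x, y = speaker_xy
    for zone in active_zones:
        if zone.contains(x, y):
            return zone.name
    return None

couch = Zone("couch", 0.0, 2.0, 3.0, 4.0)
print(accept_command((1.0, 3.5), [couch]))  # speaker on the couch: prints couch
print(accept_command((4.0, 1.0), [couch]))  # speaker elsewhere: prints None
```

Rectangles keep the example short; a real deployment would more likely need arbitrary polygons and some tolerance for localization error near zone edges.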

The team hopes to further develop the concept with more capable microphone ‘bots that can move around a room, not just a table. The team is also exploring using the robots to emit sounds to create “mute” and “active” zones in the real world, which would allow people in different parts of the same room to hear their own audio feed.

While the technology is still in an early research stage, we kind of love the idea. Many a corporate meeting could be livened up with a few cute robots skittering around, even if it’s ostensibly for the purpose of capturing better audio.
