For many, voice assistants are helpful listeners. Just shout into the void, and a timer gets set or Led Zeppelin starts playing. For some, though, the lack of flexibility and the reliance on cloud services are severe drawbacks. [John Karabudak] is one of those people, and he runs his own voice assistant with an LLM (large language model) brain.

In the mid-2010s, it seemed like voice assistants would take over the world and every interface would move to NLP (natural language processing). Cracks started to show as these assistants ran into the limits of what NLP could reasonably handle. LLMs have breathed new life into the idea, since they can handle far more complex requests and commands. However, running one locally is easier said than done.

A firewall with some muscle (a Protectli Vault VP2420) runs a VLAN and a NIPS (network intrusion prevention system) to expose the service to the wider internet. To actually run the LLM, two 16 GB RTX 4060 Ti cards provide plenty of VRAM at a cheap price point, enough to load a decent-sized model. The inference engine (vLLM) supports dozens of models, but [John] chose a quantized version of Mixtral to fit in the 32 GB of VRAM he had available.
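A rough back-of-the-envelope estimate shows why quantization matters here. The sketch below assumes the model is Mixtral 8x7B, with its published total of about 46.7 billion parameters (every expert must stay resident in VRAM even though only some are active per token), and treats the two cards as 32 GiB combined. It counts weights only and ignores the KV cache, activations, quantization scale overhead, and runtime overhead, so real usage is higher.

```python
# Rough weight-only memory estimate for Mixtral on two 16 GiB GPUs.
# Assumption: Mixtral 8x7B with ~46.7e9 total parameters (all experts loaded).
# Ignores KV cache, activations, quantization scales, and framework overhead.

MIXTRAL_PARAMS = 46.7e9      # approximate total parameter count
VRAM_GIB = 2 * 16            # two 16 GiB RTX 4060 Ti cards


def weight_gib(params: float, bits_per_weight: float) -> float:
    """Memory needed just to hold the weights, in GiB."""
    return params * bits_per_weight / 8 / 2**30


for label, bits in [("fp16", 16), ("8-bit", 8), ("4-bit", 4)]:
    need = weight_gib(MIXTRAL_PARAMS, bits)
    verdict = "fits" if need < VRAM_GIB else "does not fit"
    print(f"{label}: ~{need:.1f} GiB of weights, {verdict} in {VRAM_GIB} GiB")
```

Only the 4-bit case leaves headroom under 32 GiB, which is consistent with choosing a quantized build.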

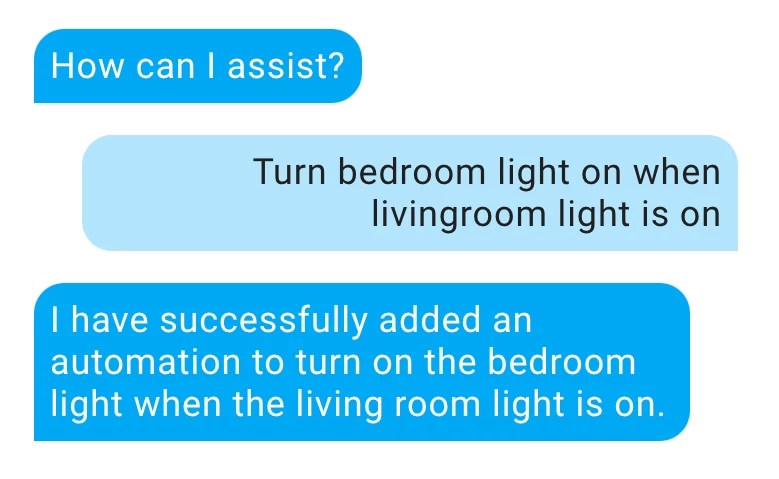

So how does the model control devices in the home? Including the home state in the system portion of the prompt was easy; getting instructions out of the model for Home Assistant to follow was harder. While some other models support function calling, Mixtral does not. [John] tweaked the wrapper connecting vLLM and Home Assistant to watch for JSON at the end of the model’s response. However, the model liked to append JSON even when it wasn’t asked to, so [John] had the model add a tag whenever the user expressed a specific intent, letting the wrapper tell real commands apart from stray JSON.
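The wrapper logic described above can be sketched roughly as follows. This is not [John]’s code: the tag string `<<INTENT>>`, the prompt wording, and the JSON shape are all hypothetical, and the only Home Assistant detail borrowed is the real `light.turn_on` service name (the `light.kitchen` entity is made up). The idea is that the home state goes into the system prompt, and trailing JSON is only treated as a command when the intent tag precedes it.

```python
from __future__ import annotations

import json

INTENT_TAG = "<<INTENT>>"  # hypothetical marker; the article does not name the real tag


def build_system_prompt(states: dict[str, str]) -> str:
    """Embed current device states in the system prompt (wording is an assumption)."""
    lines = [f"- {entity}: {state}" for entity, state in sorted(states.items())]
    return (
        "You are a voice assistant that controls a smart home.\n"
        "Current device states:\n"
        + "\n".join(lines)
        + f"\nIf the user asks you to change a device, end your reply with {INTENT_TAG} "
        "followed by a single JSON object describing the action."
    )


def extract_command(reply: str) -> tuple[str, dict | None]:
    """Split a model reply into spoken text and an optional command dict.

    JSON is only trusted when it follows the intent tag, so JSON the model
    appends on its own is passed through as ordinary text.
    """
    if INTENT_TAG not in reply:
        return reply.strip(), None
    text, _, tail = reply.rpartition(INTENT_TAG)
    try:
        command = json.loads(tail)
    except json.JSONDecodeError:
        return reply.strip(), None  # malformed JSON: treat the whole reply as speech
    if not isinstance(command, dict):
        return reply.strip(), None
    return text.strip(), command


if __name__ == "__main__":
    reply = ('Sure, turning on the kitchen lights. <<INTENT>> '
             '{"service": "light.turn_on", "entity_id": "light.kitchen"}')
    print(extract_command(reply))
```

Keying on an explicit tag, rather than on any trailing JSON, is what keeps unrequested JSON from triggering device changes.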

In the end, [John] has an intelligent assistant that controls his home with far more personality and creativity than anything you can buy now. Plus, it runs locally and can be customized to his heart’s content.

It’s a great guide with helpful tips for anyone trying something similar. Good hardware for a voice assistant can be hard to come by. Perhaps someday we’ll see custom firmware that lets the smart speakers we already own connect to a local assistant server?
